AI-Powered Knowledge Bases and Retrieval-Augmented Generation (RAG)

AI is increasingly being integrated into personal knowledge management tools – from Notion AI and Mem, to Obsidian (via plugins) and new local-edge devices like Foggie PI. A common thread among these systems is that they augment large language models (LLMs) with your personal data to answer questions or generate content. This is generally achieved through Retrieval-Augmented Generation (RAG) – an architecture that combines a knowledge retrieval step (often via vector similarity search) with LLM reasoning. In this deep dive, we’ll explain what RAG is, how it works (especially in the context of personal knowledge bases), which tools use it, and how it compares to approaches like fine-tuning or direct querying. We’ll also discuss how privacy-focused, on-device AI (e.g. Foggie PI) leverages RAG to keep your data local.

What is Retrieval-Augmented Generation (RAG)?

Retrieval-Augmented Generation (RAG) is an AI framework that connects a language model to an external knowledge repository, allowing the model to fetch and include relevant information when generating an answer. In simpler terms, RAG turns a closed-book question-answering model into an open-book one. Instead of relying solely on the text it was trained on, a RAG system searches a knowledge base for additional context before answering, which significantly improves accuracy and relevance of the response.

As IBM describes it, RAG “connects an LLM to other data … and automates information retrieval to augment prompts with relevant data for greater accuracy.” Essentially, the model isn’t guessing from memory alone; it’s looking up facts in a library of your documents and then using that to craft a response.

Why is this needed?

Large language models have an incredible breadth of knowledge from their training, but they have limitations: they can’t possibly contain your private notes by default, they can hallucinate (make up answers), and their knowledge can be outdated. They also have a limited context window (the amount of text they can consider at once). For example, GPT-3-era models could read only about 2,000 to 4,000 tokens (a few pages of text) at a time, which is not enough to ingest an entire knowledge base in one go. Fine-tuning a model on your personal data is an option (more on this later), but it’s expensive and static. RAG addresses these issues by injecting just-in-time relevant information into the model’s input. This way, the model’s vast general knowledge is combined with up-to-date specifics from your notes or documents.
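Here is a minimal sketch of that just-in-time injection under a limited context window, assuming the retrieved passages are already sorted best-first. The function name `pack_context` is hypothetical, and counting whitespace-separated words is only a rough stand-in for the model’s real tokenizer.

```python
def pack_context(chunks: list[str], budget_tokens: int) -> list[str]:
    """Greedily keep the best-ranked chunks that fit within the token budget."""
    packed: list[str] = []
    used = 0
    for chunk in chunks:
        cost = len(chunk.split())  # rough token estimate: whitespace-separated words
        if used + cost > budget_tokens:
            continue  # too big for what's left; a shorter, lower-ranked chunk may still fit
        packed.append(chunk)
        used += cost
    return packed


ranked = ["alpha beta gamma", "delta epsilon zeta eta theta", "iota kappa"]
print(pack_context(ranked, budget_tokens=6))  # → ['alpha beta gamma', 'iota kappa']
```

Greedy packing is just one simple policy; the point is that only what fits in the window ever reaches the model, so retrieval has to pick the right few passages.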

Analogy: Think of a student taking an exam. A plain LLM is like a student in a closed-book exam – they answer from what’s in their head (which might be incomplete or incorrect). A RAG-based system is like an open-book exam – the student first flips through textbooks and notes to find the facts, then writes the answer. By giving the AI access to a reference (your knowledge base), it can produce grounded answers.

How RAG Works: Combining Vector Search with LLM Reasoning

At the core of AI-powered knowledge bases such as Foggie PI is a technology called RAG, short for Retrieval-Augmented Generation.

Sounds technical? Here’s the simple version:

RAG is how your AI finds exactly what you’re looking for, even when you forget the filename, folder, or exact wording.

Let’s bring it to life with a real-world example:

📂 Imagine this:

You’re working late and need to review a contract you signed months ago with an investor agent.

Ask Foggie PI:

“Where’s the contract I signed with the broker who only charges commission if a deal closes? And how much is the commission?”

You can’t remember the filename or where you saved it, but the AI finds the answer in seconds.

🔍 Here’s how it works behind the scenes (aka how RAG kicks in):

1. It already knows your files

Foggie PI (or any RAG-powered system) has already scanned your documents, broken them into small chunks (like paragraphs), and turned each chunk into something called an embedding: basically, a math-based fingerprint of the text’s meaning. These “meaning maps” are stored in a super-fast vector database.
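The indexing step can be sketched in a few lines of Python. This is a toy, not how Foggie PI or any particular product does it: `toy_embed` is a hypothetical hashed bag-of-words function standing in for a real embedding model (which would capture meaning rather than shared words), and a plain Python list stands in for the vector database.

```python
import math
import zlib


def chunk_by_paragraph(text: str) -> list[str]:
    """Split a document into paragraph chunks (blank-line separated)."""
    return [p.strip() for p in text.split("\n\n") if p.strip()]


def toy_embed(text: str, dims: int = 64) -> list[float]:
    """Hypothetical stand-in for an embedding model: hashed bag of words, L2-normalised."""
    vec = [0.0] * dims
    for word in text.lower().split():
        # crc32 is deterministic across runs, unlike Python's built-in hash() for str
        vec[zlib.crc32(word.encode("utf-8")) % dims] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def build_index(docs: dict[str, str]) -> list[tuple[str, str, list[float]]]:
    """Return (source, chunk, vector) rows: an in-memory list standing in for a vector DB."""
    index = []
    for source, text in docs.items():
        for chunk in chunk_by_paragraph(text):
            index.append((source, chunk, toy_embed(chunk)))
    return index


index = build_index({"contracts/broker.txt": "Broker agreement.\n\nCommission is due only if a deal closes."})
print(len(index))  # → 2
```

Keeping the source path next to each chunk is what later lets the assistant tell you *where* the answer lives, not just what it says.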

2. You ask in your own words

You don’t need keywords. You ask naturally, and it works for photos and videos as well as documents:

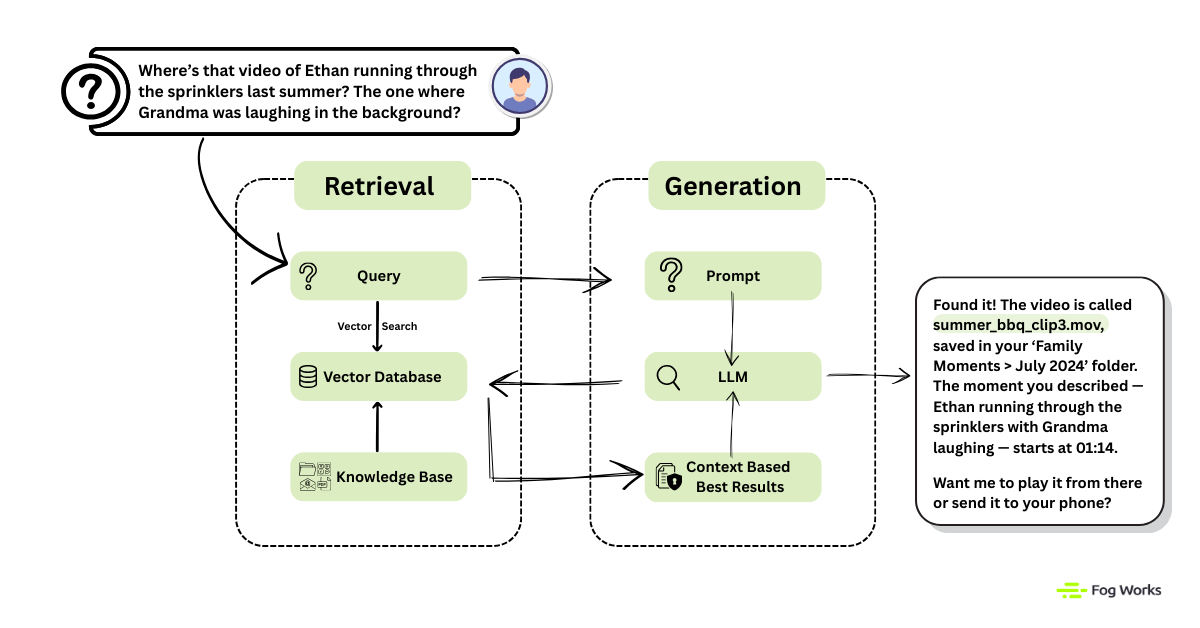

“Where’s that video of Ethan running through the sprinklers last summer? The one where Grandma was laughing in the background?”

You’ve got a vague memory, and that’s enough.

You don’t remember the file name.

You don’t know where it’s saved.

All you remember is the moment.

3. It searches by meaning

- Your question gets converted into a vector embedding: a mathematical representation of your intent.

- The AI searches your notes, media captions, and transcripts using semantic search.

- It finds a match like:

“July 2024 – backyard BBQ. Ethan runs through the sprinklers while Grandma laughs off-camera.”
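The search in step 3 usually boils down to comparing the query vector with the stored vectors and keeping the closest ones. Below is a brute-force cosine-similarity sketch with made-up three-dimensional vectors standing in for real embeddings; production vector databases typically use approximate nearest-neighbour indexes instead of scanning every row.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec: list[float], index: list[tuple[str, str, list[float]]],
          k: int = 3) -> list[tuple[float, str, str]]:
    """Brute-force search: score every (source, chunk, vector) row, return the k best."""
    scored = [(cosine(query_vec, vec), source, chunk) for source, chunk, vec in index]
    scored.sort(key=lambda row: row[0], reverse=True)
    return scored[:k]


# Made-up 3-d vectors standing in for real embeddings (hypothetical files)
index = [
    ("family/july_2024_bbq.txt", "Backyard BBQ. Ethan runs through the sprinklers.", [0.9, 0.1, 0.0]),
    ("work/broker_contract.txt", "Commission is payable only if a deal closes.", [0.0, 0.2, 0.9]),
]
query = [0.8, 0.2, 0.1]  # pretend embedding of "Ethan in the sprinklers last summer"
print(top_k(query, index, k=1)[0][1])  # → family/july_2024_bbq.txt
```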

4. Then it responds with something like:

“Found it! The video is called summer_bbq_clip3.mov, saved in your ‘Family Moments > July 2024’ folder. The moment you described, Ethan running through the sprinklers with Grandma laughing, starts at 01:14. Want me to play it from there?”
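Step 4 is the generation half: the retrieved chunks and your question are combined into one prompt for the language model. A minimal sketch of that assembly, assuming retrieval returns `(source, chunk)` pairs. `build_prompt` and its instruction wording are illustrative, and the final call to a local or cloud LLM is left out because it depends on the model you use.

```python
def build_prompt(question: str, hits: list[tuple[str, str]]) -> str:
    """Combine retrieved (source, chunk) pairs and the user's question into one prompt."""
    context = "\n\n".join(
        f"[{i}] ({source}) {chunk}" for i, (source, chunk) in enumerate(hits, start=1)
    )
    return (
        "Answer the question using only the numbered context below. "
        "Name the source file you relied on. If the context does not contain "
        "the answer, say so instead of guessing.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


prompt = build_prompt(
    "Where's the video of Ethan running through the sprinklers?",
    [("Family Moments/July 2024/summer_bbq_clip3.mov",
      "July 2024, backyard BBQ. Ethan runs through the sprinklers while Grandma laughs off-camera.")],
)
print(prompt)
```

Labelling each chunk with its source is what allows an answer like the one above to name the exact file and folder instead of paraphrasing from memory.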

All that without you lifting a finger to search or dig.

🤯 Why Is This Better Than Regular Search?

Traditional search = “Did you type the exact keyword?”

RAG = “I understand what you’re asking. Let me find it based on meaning.”
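To see why exact matching struggles, here is a deliberately naive keyword search (a hypothetical helper, not any product’s search engine) run against a contract clause that says the same thing in different words:

```python
import re


def keyword_search(query: str, docs: dict[str, str]) -> list[str]:
    """Naive keyword search: return sources containing every query word (case-insensitive)."""
    terms = set(re.findall(r"\w+", query.lower()))
    hits = []
    for source, text in docs.items():
        words = set(re.findall(r"\w+", text.lower()))
        if terms <= words:  # every query term must appear verbatim
            hits.append(source)
    return hits


# Hypothetical clause that paraphrases "commission only if a deal closes"
docs = {"broker_agreement.txt": "The agent earns a success fee only upon completion of a transaction."}
print(keyword_search("commission if deal closes", docs))  # → []
print(keyword_search("success fee", docs))                 # → ['broker_agreement.txt']
```

A good embedding model would be expected to place “commission if deal closes” and “success fee upon completion of a transaction” close together, which is exactly the gap semantic retrieval is meant to close.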

RAG systems combine:

- Retrieval: Finding relevant snippets from your knowledge base using semantic search.

- Generation: Crafting a human-like answer using a language model like GPT.

Together, it’s like having a super-smart assistant who has read all your files, remembers everything, and actually understands what you mean.

Privacy and On-Device RAG for Personal AI

One important aspect for personal knowledge bases is privacy. By their nature, your notes and files can be sensitive. RAG doesn’t necessarily guarantee privacy – if you use a cloud-based vector DB or a hosted LLM API, your data still leaves your possession – but RAG does enable a path to privacy that pure cloud LLM solutions didn’t have. Because you can keep the retrieval and data storage local, you can essentially build a personal AI that is privacy-respecting. Tools like the Obsidian Co-Pilot plugin and Foggie PI are pushing in this direction.

Local-first AI: How Foggie PI Keeps It All On Your Machine

Foggie PI, in particular, is explicitly designed as an on-device personal AI. All data is stored on the device (billed as the ultimate companion to the Mac Mini M4), and all AI processing is done locally on your hardware. This means your files aren’t uploaded to someone else’s server for indexing or querying. The device uses its M4 chip to accelerate AI tasks, so it can handle the embedding and possibly run models to answer questions. When you ask Foggie PI something, it “delivers” answers from your files instantly, much like local search on steroids.

Works Without Internet. Works Instantly.

Local-first RAG systems also eliminate network latency and can work without internet. The downside, as noted earlier and as community users have found, is that running AI models locally can be slower or limited. Foggie PI’s value proposition is to mitigate that by providing dedicated hardware (and possibly highly optimized local models) to give you speed without sacrificing privacy. It’s an exciting development: essentially having your own “ChatGPT” that has read all your files and lives next to your computer – and only you have the key to it.

Mix and Match: Hybrid Setups for Power Users

It’s also worth noting that RAG can be combined with local models in a very modular way. For example, you could use OpenAI’s API for embeddings (which means the text being embedded is sent to OpenAI), but then keep the vectors local and run an open-source generator model; or, conversely, use a local embedding model but send the retrieved text to GPT-4 for a better answer (which is what some Obsidian setups do, giving you a per-query choice between offline and cloud). The architecture allows for these hybrid approaches, so users can choose based on the sensitivity of their data and the answer quality they need. Some companies are also exploring federated or on-device embeddings for privacy, so raw text never leaves the device, only embeddings, from which the original text is harder (though not impossible) to reconstruct.
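A hybrid setup needs some per-query routing rule. One possible policy, sketched with hypothetical names (`Chunk.sensitive`, `choose_generator`) and plain functions standing in for a local model and a cloud API: use the cloud model only when the user allows it and none of the retrieved chunks is marked sensitive.

```python
from dataclasses import dataclass
from typing import Callable

Generator = Callable[[str], str]  # takes a prompt, returns an answer


@dataclass
class Chunk:
    source: str
    text: str
    sensitive: bool  # hypothetical per-file or per-folder flag set by the user


def choose_generator(chunks: list[Chunk], local: Generator, cloud: Generator,
                     allow_cloud: bool) -> Generator:
    """Route to the cloud model only if allowed and no retrieved chunk is sensitive."""
    if allow_cloud and not any(c.sensitive for c in chunks):
        return cloud
    return local


# Stub generators so the sketch runs without any model installed
def local_llm(prompt: str) -> str:
    return "answered locally"


def cloud_llm(prompt: str) -> str:
    return "answered in the cloud"


hits = [Chunk("finance/broker_contract.pdf", "Commission clause", sensitive=True)]
print(choose_generator(hits, local_llm, cloud_llm, allow_cloud=True)("prompt"))  # → answered locally
```

Because retrieval happens before generation, the routing decision can look at exactly which pieces of your data would leave the device, which is what makes per-query privacy choices practical.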

Overall, RAG doesn’t force your data to be public or external; it actually opens the door for more personal ownership of AI, as Foggie PI’s motto “Own AI before AI owns you” alludes to.

Own AI Before AI Owns You

Foggie PI’s mission is simple: your data, your device, your AI.

With the rise of tools that index everything about you, from documents to habits, it’s more important than ever to have AI that serves you, not third-party platforms. RAG done right, especially on-device, makes that possible.

As this technology matures, expect to see retrieval-augmented AI built into everything: calendars, file explorers, email clients, even your operating system. But whether it’s in the cloud or on your desk, one thing’s clear: the smartest assistants will be the ones that know your data and respect your boundaries.